16 May 2018

Machine Learning

Computer Vision

Word Embedding

Social media is flooded with photos of many kinds: food, travel, selfies, and so on. Unless the user states it explicitly, the category of an uploaded photo is unknown. To create meaningful value from social media photos, it would be useful to have a scalable model that classifies which category each photo belongs to.

For this particular problem, a supervised learning algorithm might not scale, for several reasons:

- Supervised learning requires a large dataset to train on, but it is hard to acquire a large quantity of social media photos because of privacy issues.

- Even if such a dataset were publicly available, it is unlikely that it would also contain image category labels.

- EVEN IF both data and labels were available, human-labeled categories carry a risk of bias. Some photos could sit between two possible categories; who gets to decide which one applies?

Hence, a supervised learning algorithm is not a smart choice for this case, which makes an unsupervised learning algorithm a pretty attractive option.

The unsupervised image categorization takes three data processing steps:

- Image item labelling using Google Cloud Vision API

- Vectorization of images using Word2Vec

- Clustering the image vectors by K-Means algorithm

1. Image item labelling using Google Cloud Vision API

The Google Cloud Vision API provides labels for the items that appear in an image, together with probability and topicality values. The probability value indicates how accurate the given label is for the item, and the topicality value indicates the significance of the item relative to the other items in the image.

After running the image labelling API on each image, I have a list of item labels with their respective probability and topicality values, which are floating-point numbers between 0 and 1. These two values are used in the next step to weight the image vectorization with word embeddings.

2. Vectorization of images using Word2Vec

Using the item labels for each image, the algorithm then imports a pretrained GloVe Word2Vec model to convert the labels into vectors. Each word vector is further weighted by multiplying it by the corresponding probability and topicality values. Since there is generally more than one label per image, the algorithm averages the word vectors assigned to each image, producing a single centroid vector that best describes what the image is about. In the end, each image is converted to a numerical vector, so that it can be processed with Euclidean arithmetic.
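The weighting and averaging step can be sketched roughly as follows. This is a minimal illustration, not the original implementation: the `image_vector` function and the tiny `TOY_EMBEDDINGS` table are hypothetical stand-ins for the pretrained model, and I assume the average is a plain mean of the weighted vectors, with labels missing from the vocabulary skipped.

```python
from typing import Dict, List, Optional, Tuple

Vector = List[float]
# One entry per label returned for an image: (label, probability, topicality).
Label = Tuple[str, float, float]

# Hypothetical toy embedding table; a real run would load pretrained vectors.
TOY_EMBEDDINGS: Dict[str, Vector] = {
    "pizza": [1.0, 0.0, 0.0],
    "plate": [0.0, 1.0, 0.0],
    "table": [0.0, 0.0, 1.0],
}


def image_vector(labels: List[Label],
                 embeddings: Dict[str, Vector]) -> Optional[Vector]:
    """Average the label vectors, each scaled by probability * topicality.

    Returns None when none of the labels has an embedding, so such images
    can be set aside instead of being clustered.
    """
    total: Optional[Vector] = None
    count = 0
    for word, probability, topicality in labels:
        vector = embeddings.get(word)
        if vector is None:
            continue  # assumption: out-of-vocabulary labels are ignored
        weight = probability * topicality
        if total is None:
            total = [0.0] * len(vector)
        for i, value in enumerate(vector):
            total[i] += weight * value
        count += 1
    if total is None:
        return None
    return [component / count for component in total]
```

Calling `image_vector([("pizza", 0.9, 0.8), ("plate", 0.5, 0.5)], TOY_EMBEDDINGS)` yields one vector for the image, dominated by the label with the larger combined weight.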

3. Clustering the image vectors by K-Means algorithm

Once all images are converted to vectors, the algorithm applies K-Means to cluster images with similar characteristics, which basically means the types of items they contain.
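For readers unfamiliar with K-Means, here is a minimal pure-Python sketch of the standard assign-then-update loop (Lloyd's algorithm). It is an illustration only: the `k_means` function, its parameters, and the random initialization from the data points are my assumptions, and a library implementation would normally be used in practice.

```python
import random
from typing import List, Tuple

Vector = List[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def k_means(points: List[Vector], k: int, iterations: int = 100,
            seed: int = 0) -> Tuple[List[int], List[Vector]]:
    """Cluster points into k groups; returns (assignments, centroids)."""
    rng = random.Random(seed)
    # Assumption: initial centroids are k distinct data points chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    assignments = [-1] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = mean(members)
    return assignments, centroids
```

The returned `assignments` list gives a cluster index per image vector, and `centroids` holds one centroid vector per cluster.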

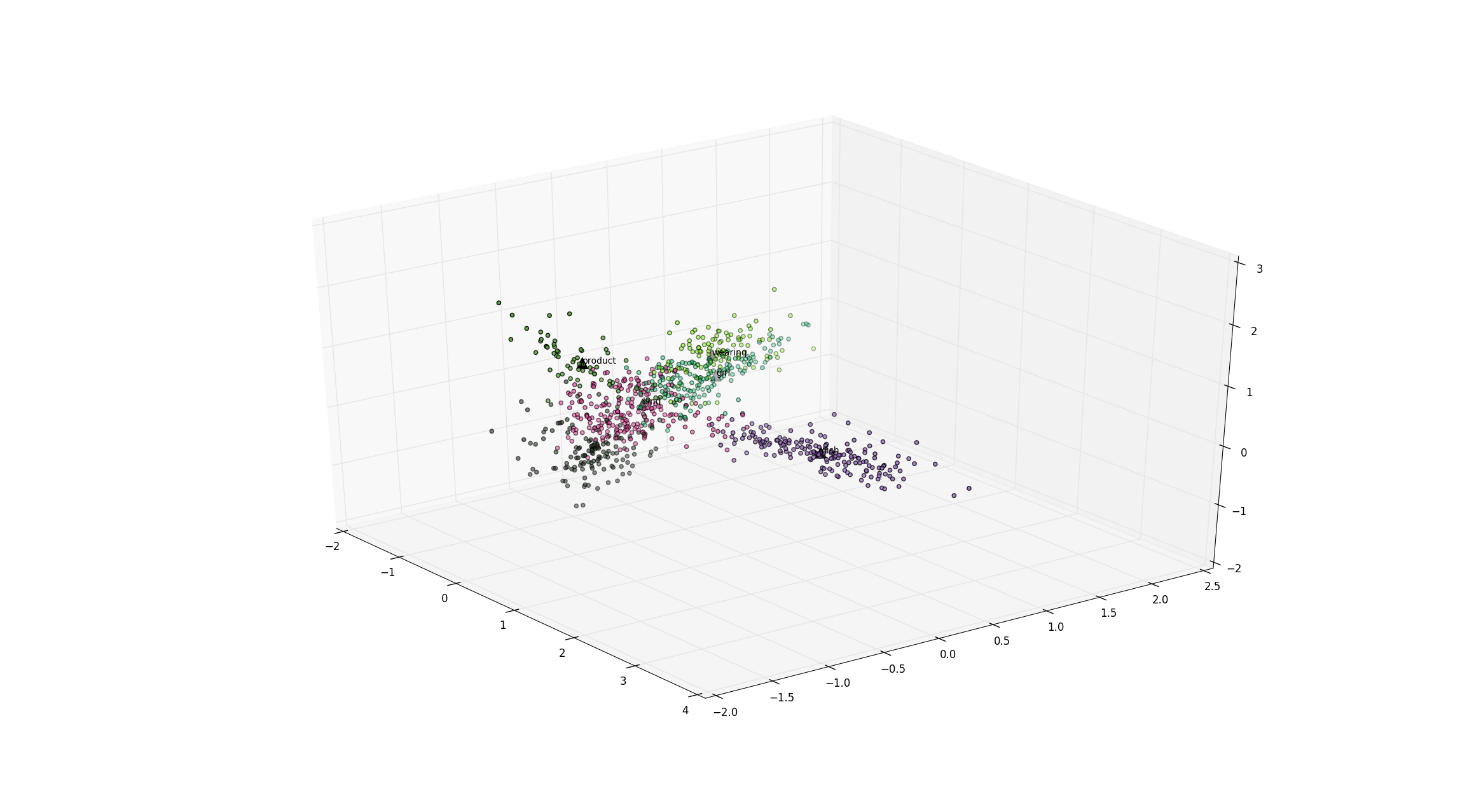

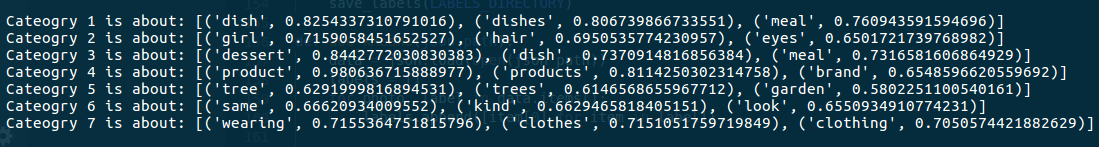

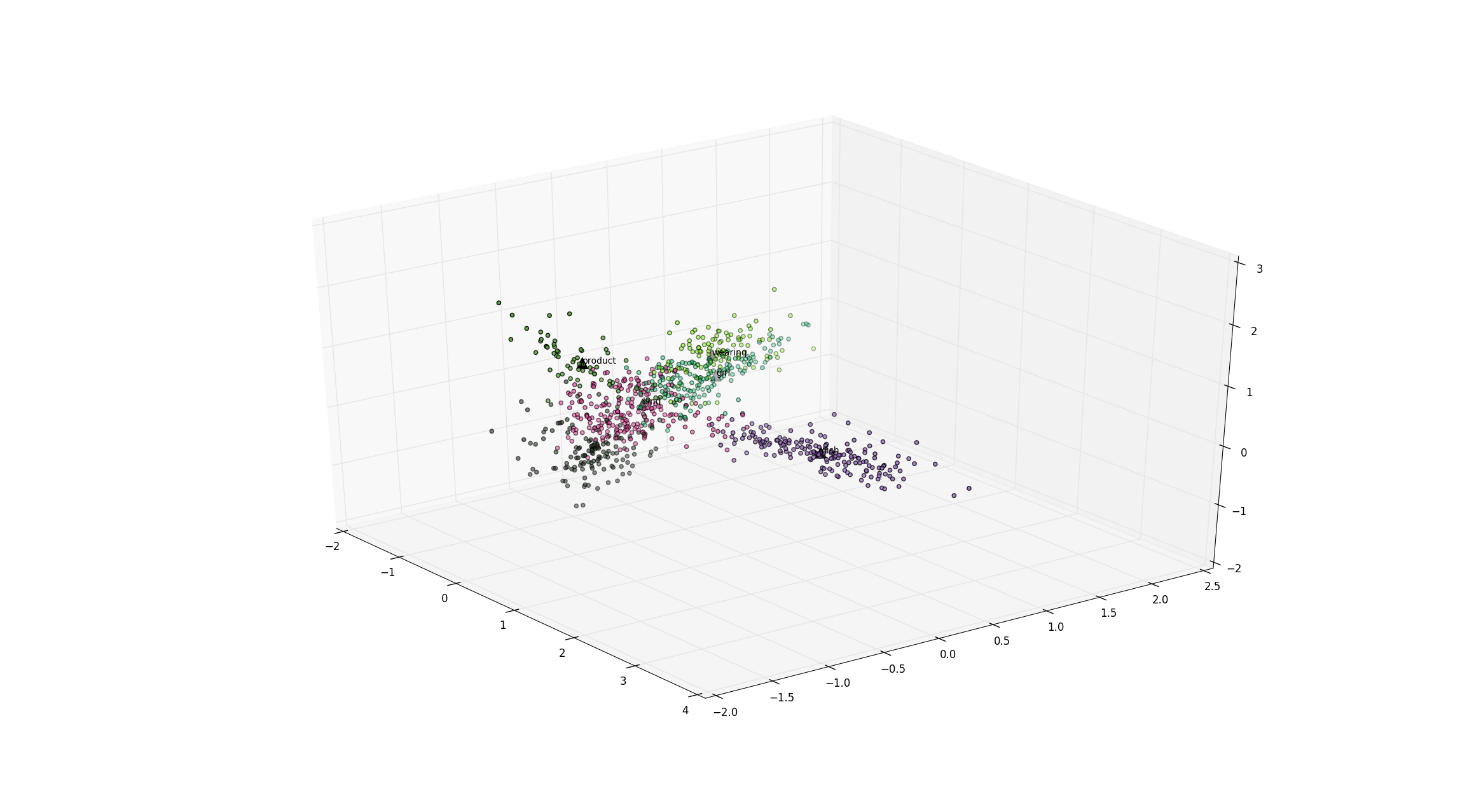

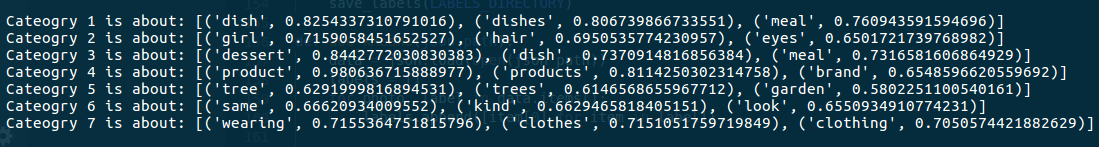

The figure above visualizes the image vector space with its dimension reduced to 3 using Principal Component Analysis (PCA). Data points with the same color belong to the same image category. To find out what each image category represents, the program finds the centroid vector of each image category and looks up the Word2Vec model to list the words closest to it.
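The centroid lookup can be sketched as a nearest-neighbour search over the vocabulary. This is a minimal illustration under my own assumptions: closeness is measured with cosine similarity (a common choice for word vectors; the original may have used a different measure), and `nearest_words` and the embedding dictionary it takes are hypothetical names.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_words(centroid: Vector, embeddings: Dict[str, Vector],
                  top_n: int = 3) -> List[str]:
    """Return the top_n vocabulary words most similar to the centroid."""
    ranked = sorted(
        embeddings,
        key=lambda word: cosine_similarity(centroid, embeddings[word]),
        reverse=True,
    )
    return ranked[:top_n]
```

Running `nearest_words` once per cluster centroid gives a short list of words that can serve as a human-readable name for that category.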

As shown by the printed output, it is pretty obvious that category 1 contains images related to food, while category 7 contains images related to clothing and fashion. Initially, the dataset contains uncategorized images scraped from Instagram, and the algorithm classifies them into arbitrarily generated categories such as food, products, and travel.

Summary

This technique categorizes an image dataset into a number of arbitrary topics, based on the items spotted in each image. It first labels the items inside each image, converts the labels into semantic vectors, and clusters them using the K-Means algorithm. Bear in mind that the accuracy of the categorization depends on the accuracy of the Google Cloud Vision API and the pretrained Word2Vec model. There were a few outlier images that did not return any label when run through Cloud Vision API labelling; these are better excluded and categorized as “No Category”.

As described above, this technique is an unsupervised learning algorithm. This means that instead of defining the categories first and classifying each image into one of them, the algorithm groups all the images first and then labels each image category based on the common characteristics of the clustered images.

05 Sep 2017

CS3216

Web Application

There are several takeaways from Flowx that I can apply to my own app development:

1. “Free Users vs Premium Users” nailed it

It’s every application’s dilemma that it has to charge users somehow to generate revenue. The main problem is that app users generally have a low tolerance for payments; they would rather go for a slightly inferior free solution than pay for a premium service. Flowx distinguishes between Free users and Premium users, and provides a different level of utility to each group. What I find laudable about Flowx’s pricing policy is that it manages to separate the user experience by payment while still satisfying both groups to a certain extent. To Free users, Flowx provides basic features such as real-time rain and wind conditions, which is just enough for average users whose main purpose is to check the daily weather. To Premium users, on the other hand, Flowx provides over 30 types of data features, fulfilling the needs of Power users who want meteorological data for more professional purposes. In the end, Free users are satisfied with the service because it is exactly what they need, and Premium users are satisfied because they get the utilities they want, which are not open to Free users.

2. Too much UI, but too bad

By its nature as a meteorological app, Flowx has to display a lot of information on the screen simultaneously. The team tried their best to make the UI legible, but some of the fonts and images are still too small to read because everything, including the giant map, has to be crammed into a small smartphone screen. This is probably why meteorological apps are relatively harder to find on mobile than on desktop: meteorological data inherently requires a large screen to be displayed properly. This taught me that when choosing an idea for a mobile app, it is important to recognize the inherent limitations of the mobile environment, and if the limitations are too severe, I might have to pivot to another idea instead.

3. Singapore is too small for this

One thing the presenter pointed out was that even at maximum zoom, Singapore is too small on the map for the app to provide meaningful weather data to Singaporean users. This point is important because when an app targets a global audience, a feature might work in one region but not so well in another due to various limitations. Flowx might have lost a significant number of Singaporean users because of its map resolution limit, and potentially users in other places where weather data at a very fine scale matters. Hence, when designing an application, the developer has to take the different conditions in different markets into consideration, and adapt to each market as much as possible.

My Thoughts

While the idea of a meteorological app is definitely cool and the UI & UX are done decently, I believe this application has some innate limitations, especially in terms of generating revenue. During the presentation, the group proposed potential business ideas targeting restaurants and outdoor activities, but basic weather data such as rain activity is already available to Free users. If Flowx made rain activity a premium feature, it would lose a huge number of Free users, so that is not a smart move. As for providing advanced meteorological data to Power users, there is no significant reason that it has to come with mobility. Since a desktop application provides a much better experience than a mobile application for the same concept, Power users are much more likely to use a desktop app instead. Hence, while the app managed to attract a large user pool with its cool idea and design, I foresee limited business opportunities for it.

27 Aug 2017

Jitsi

GSoC

WebRTC

My project in this year’s Google Summer of Code was to implement Micro Mode for the Jitsi Meet Electron project, and to work on the foundation of the Jitsi Meet Spot project, which Jitsi launched recently.

GSoC Blog Posts:

https://han-gyeol.github.io/categories/#gsoc

Two repositories I contributed to during this year’s GSoC:

Jitsi Meet Electron

Jitsi Meet Spot

Things I have learned from the project

- WebRTC P2P Connection

- Node.js

- Object-oriented ES6

- Electron

- React

Challenges faced

- Creating the Micro Mode’s remote video component ran into an immense amount of trouble due to the limitations of Electron’s inter-window communication. In the end I used WebRTC’s RTCPeerConnection, which has its own set of limitations.

- Some features available in the latest version of Chromium were not available in Electron because the version of Chromium embedded in Electron was not the latest release. For example, I was not able to use HTMLMediaElement.captureStream() in the Electron BrowserWindow.

- WebRTC is still under development and many of its features were not yet supported by browsers. For example, I had no choice but to use the deprecated RTCPeerConnection.addStream() instead of RTCPeerConnection.addTrack() because addTrack() was not supported in Chrome yet.

- The performance of the Micro Mode was not optimal, as it takes up a substantial amount of resources when in use, causing occasional lag when it runs together with the main Jitsi Meet Electron process. This was alleviated by starting the Micro Mode lazily instead of on startup.

- In my implementation of Micro Mode’s toolbar, there were unnecessarily complicated layers of interfaces & abstractions that I failed to resolve. These could be solved by allocating more tasks to Electron’s renderer process instead of the main process. However, this goes against the Electron design principle of keeping the renderer process as simple as possible and making it responsible only for the user interface.

Conclusion

Google Summer of Code was my first experience of professional coding, and it certainly opened my eyes to contributing to an open source project. I have learned that frequent communication with the mentors and knowing my limits are essential to succeeding in team programming. It was a shame that I wasted too much time figuring out solutions to problems that were beyond my capabilities, and ended up spending less time writing actual code. At the end of the day, it was good to learn that the amount of time I spend on a project does not necessarily reflect the amount of work I have done by the time the project ends. Next time, I would definitely ask for other people’s opinions more and clarify the development direction before I start writing the actual code. Lots of appreciation to Saúl and Hristo for guiding me through the project, and to Google for giving me such a precious opportunity.

22 Aug 2017

Jitsi

GSoC

WebRTC

I have started working on the next project, Jitsi Meet Spot. Jitsi Meet Spot is a video conference application powered by Jitsi Meet, suited to a physical conference room environment rather than a personal desktop environment.

https://github.com/jitsi/jitsi-meet-spot/pull/1

My job was to set up an HTTP server that receives commands from clients and uses JitsiMeetExternalAPI to initiate a meeting or control conference settings, such as muting the audio/video.

An HTTP command consists of two components: a command type and arguments. The client-side application is currently missing, so the server can be tested by sending a curl request.

curl --data "command=<command.type>&args=<arguments>" <targetURL>

The supported commands are:

- join conference

- hangup

- toggle audio

- toggle video

- toggle film strip

- toggle chat

- toggle contact list
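To make the command format concrete, here is a minimal sketch of how a form-encoded body like the one sent by the curl request above could be split into a command type and arguments. It is written in Python purely for illustration and is not the server's actual code; the `parse_command` function and the command identifiers in `SUPPORTED_COMMANDS` are hypothetical, since the exact strings the server accepts are not listed here.

```python
from typing import List, Tuple
from urllib.parse import parse_qs

# Hypothetical identifiers standing in for the supported commands above.
SUPPORTED_COMMANDS = {
    "join",
    "hangup",
    "toggleAudio",
    "toggleVideo",
    "toggleFilmStrip",
    "toggleChat",
    "toggleContactList",
}


def parse_command(body: str) -> Tuple[str, List[str]]:
    """Split a 'command=<type>&args=<arguments>' body into its two parts.

    Raises ValueError when the command type is missing or not supported.
    """
    fields = parse_qs(body)
    command = fields.get("command", [""])[0]
    if command not in SUPPORTED_COMMANDS:
        raise ValueError("unsupported command: %r" % command)
    # Commands such as hangup carry no arguments, so 'args' may be absent.
    arguments = fields.get("args", [])
    return command, arguments
```

For example, `parse_command("command=join&args=MyRoom")` separates the command type from the room name, after which a server could dispatch on the command type.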

07 Aug 2017

CS3216

Web Application

Web development apparently has a very low barrier to entry, judging by how the internet is flooded with web development tutorials. That is probably why thousands of applications are released every month, most of which the majority of people have never even heard of. The biggest trap many app developers fall into is an illusion about the usefulness of their apps. Most developers try to solve problems that are not much trouble in the first place. They identify an inconvenience in people’s lives, develop an app that alleviates or eradicates it, and then find out that people find using the app more troublesome than the problem it solves. If an application is going to be used by people, its usefulness must outweigh the trouble of using it. Almost all developers take pride in their work, and are often misled into thinking that other people will love their app as much as they do. Sadly, this is almost never the case.

For an application to be successful, its value must come from the developers themselves, not from others. App developers should try to solve THEIR problems first, instead of OTHERS’ problems, because only then do they have clear knowledge of ‘how troublesome’ the problem is, instead of guessing at other people’s opinions about it. This is exactly how much great software is born. Git was created because a Finnish developer found the existing version control solutions to be a complete mess, so he decided to solve HIS problem by making a new version control solution that HE wanted to use. And now, everyone uses Git.

In my CS3216 journey, I would like to identify problems in my life that I WANT to solve, and create an application that I WANT to use. Then I will release the app to the public, and if there is anyone who finds it as useful as I do, that is one more useful app developed for others. I am really excited about the lectures and workshops, which will feed me many useful web technologies that I can use in my projects. However, I see CS3216 as more of an opportunity than a course to learn from; its resources, teammates, and great mentors attract me to this module more than anything. I would like to dive into the world of app development and test whether my app development philosophy is indeed a worthy one.